Machine code, also known as machine language, is the lowest-level programming language directly understood by a computer’s central processing unit (CPU). It consists of binary instructions (1s and 0s) that the CPU executes to perform specific tasks. Each instruction in machine code corresponds to a precise operation—whether it’s moving data, performing calculations, or making decisions.

Unlike higher-level programming languages, machine code is architecture-specific. This means that the machine code for an Intel x86 processor differs from that of an ARM or MIPS processor. How instructions are encoded depends on the processor’s instruction set architecture (ISA).
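To make the architecture point concrete, here is a small Python sketch that prints the raw bits of the same simple operation, "put the value 1 into a register", for two instruction sets: x86 (`mov eax, 1`, encoded as the bytes `B8 01 00 00 00`) and 32-bit ARM (`mov r0, #1`, encoded as the word `0xE3A00001`). The encodings are hard-coded from the two instruction sets rather than produced by an assembler, and the helper name `to_bits` is hypothetical, chosen only for this illustration.

```python
# x86: opcode B8 ("mov eax, imm32") followed by the 32-bit value 1 in little-endian order.
x86_mov_eax_1 = bytes([0xB8, 0x01, 0x00, 0x00, 0x00])

# 32-bit ARM (A32): "mov r0, #1" is a single 32-bit instruction word.
arm_mov_r0_1 = 0xE3A00001


def to_bits(data: bytes) -> str:
    """Hypothetical helper: show each byte as 8 binary digits, separated by spaces."""
    return " ".join(format(b, "08b") for b in data)


print("x86:", to_bits(x86_mov_eax_1))
print("ARM:", format(arm_mov_r0_1, "032b"))
```

Same intent, entirely different bit patterns: that is what "architecture-specific" means in practice.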

But before diving deeper into machine code and binary, let’s explore the numeral systems that underpin how computers process data.

The Decimal System

The decimal numeral system is the one we use every day. It’s the standard system for representing both whole and fractional numbers.

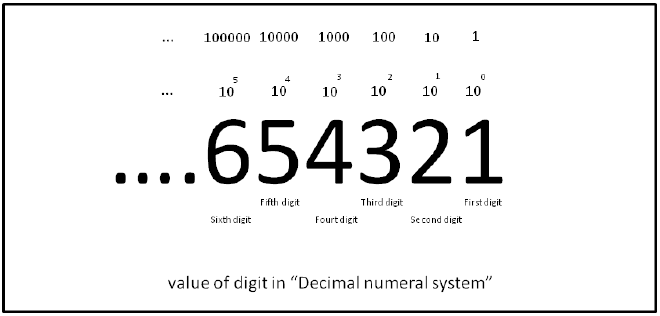

In the decimal system, there are ten digits (0 through 9), which is why it’s called “base 10.” This system relies on positional notation, where the position of each digit determines its value based on powers of 10.

For example, in the number 325, the digit 3 represents 3 * 10^2 (=300), 2 represents 2 * 10^1 (=20), and 5 represents 5 * 10^0 (=5).
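Positional notation can be sketched in a few lines of Python. The function below (a hypothetical helper, not from any library) peels off digits with repeated division by the base and pairs each digit with the value it contributes at its position.

```python
def place_values(n: int, base: int = 10) -> list[tuple[int, int]]:
    """Return (digit, digit * base**position) pairs, most significant digit first."""
    digits = []
    while n > 0:
        digits.append(n % base)  # lowest remaining digit
        n //= base               # shift everything one position to the right
    digits.reverse()             # most significant digit first
    top = len(digits) - 1        # exponent of the leftmost position
    return [(d, d * base ** (top - i)) for i, d in enumerate(digits)]


parts = place_values(325)
print(parts)                      # [(3, 300), (2, 20), (5, 5)]
print(sum(v for _, v in parts))   # 325
```

Adding the contributions back together reproduces the original number, which is exactly what positional notation promises.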

Why base 10?

Base 10 is intuitive because humans historically used their ten fingers to count. Over time, this system became the default for human mathematics and counting.

The Binary System

Now let’s transition to the system computers understand: binary.

Binary, or base 2, uses only two digits: 0 and 1. Just as decimal is based on powers of 10, binary is based on powers of 2.

For instance, in binary, the number 101 represents:

- 1 * 2^2 (4)
- 0 * 2^1 (0)
- 1 * 2^0 (1)

Summing these values gives us 5 in decimal form.

You can think of each binary digit as a switch that turns its power of 2 on (1) or off (0).
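The "switch" view translates directly into code. This minimal sketch (the name `binary_to_decimal` is hypothetical) walks the digits from right to left and adds 2^position for every digit that is switched on.

```python
def binary_to_decimal(bits: str) -> int:
    """Sum the powers of 2 whose positions hold a 1."""
    total = 0
    # reversed() makes position 0 the rightmost digit, matching 2^0.
    for position, bit in enumerate(reversed(bits)):
        if bit == "1":
            total += 2 ** position  # this position is switched on
        elif bit != "0":
            raise ValueError(f"not a binary digit: {bit!r}")
    return total


print(binary_to_decimal("101"))  # 5
print(int("101", 2))             # 5, Python's built-in parser agrees
```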

Why use base 2 and not, say, base 6?

Computers and digital systems use binary because electronic devices naturally operate with two clearly distinguishable states, such as high and low voltage, which we call on and off. These states are represented as `1` (on) and `0` (off), making binary an efficient system for digital electronics.

Converting Decimal to Binary

Let’s walk through how to convert a decimal number into binary.

To illustrate, let’s use the number 58. The process involves repeatedly subtracting the largest power of 2 that is less than or equal to the remaining value, writing a 1 for each power used and a 0 for each power skipped.

- The largest power of 2 less than or equal to 58 is 32 (2^5). Subtracting 32 leaves us with 26. This corresponds to 1 * 2^5.
- Next, the largest power of 2 less than or equal to 26 is 16 (2^4). Subtracting 16 leaves us with 10. This corresponds to 1 * 2^4.
- Then, the largest power of 2 less than or equal to 10 is 8 (2^3). Subtracting 8 leaves us with 2. This corresponds to 1 * 2^3.
- We skip 2^2 (4) because it doesn’t fit into the remaining 2, so it becomes 0 * 2^2.
- Next, 2^1 (2) fits exactly, leaving no remainder. This corresponds to 1 * 2^1.
- Finally, nothing is left for 2^0 (1), so it becomes 0 * 2^0.

Putting it all together:

$$ 58 = 1 \cdot 2^5 + 1 \cdot 2^4 + 1 \cdot 2^3 + 0 \cdot 2^2 + 1 \cdot 2^1 + 0 \cdot 2^0 = 111010_2 $$

So, 58 in decimal equals 111010 in binary.
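The subtraction method walked through above can be sketched as follows, assuming a non-negative integer input; `decimal_to_binary_by_subtraction` is a hypothetical name. It first finds the largest power of 2 that fits, then moves down one power at a time, writing 1 when the power fits into what remains and 0 when it does not.

```python
def decimal_to_binary_by_subtraction(n: int) -> str:
    """Convert a non-negative integer to a binary string by subtracting powers of 2."""
    if n < 0:
        raise ValueError("only non-negative integers are supported")
    if n == 0:
        return "0"
    # Find the largest power of 2 that is <= n (32 for n = 58).
    power = 1
    while power * 2 <= n:
        power *= 2
    bits = []
    # Walk down through every power, including the ones we skip.
    while power >= 1:
        if power <= n:
            bits.append("1")  # this power fits: use it
            n -= power
        else:
            bits.append("0")  # too big for what remains: skip it
        power //= 2
    return "".join(bits)


print(decimal_to_binary_by_subtraction(58))  # 111010
```

Walking all the way down to 2^0 is what produces the trailing 0 in 111010; stopping as soon as the remainder hits zero would drop it.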

How can I convert decimal numbers to binary quickly?

- Use an online tool like Decimal to Binary Converter.

- Manually divide the decimal number by 2, recording the **integer quotient** and the **remainder** (which will always be 0 or 1). Repeat the division using the new quotient until the quotient becomes 0. Write the remainders in reverse order (last remainder first) to form the binary number.
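The repeated-division method from the second tip can be sketched like this, again assuming a non-negative integer input and using a hypothetical function name. `divmod` returns the integer quotient and the remainder in one step, and the remainders are reversed at the end because the first one produced is the least significant bit.

```python
def decimal_to_binary_by_division(n: int) -> str:
    """Convert a non-negative integer to binary via repeated division by 2."""
    if n < 0:
        raise ValueError("only non-negative integers are supported")
    if n == 0:
        return "0"
    remainders = []
    while n > 0:
        n, r = divmod(n, 2)       # integer quotient and remainder (0 or 1)
        remainders.append(str(r))
    # The last remainder is the most significant bit, so read them in reverse.
    return "".join(reversed(remainders))


print(decimal_to_binary_by_division(58))  # 111010
print(format(58, "b"))                    # 111010, Python's built-in formatting agrees
```

For 58 the remainders come out as 0, 1, 0, 1, 1, 1; reversed, they spell 111010, matching the subtraction method.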

In the decimal system, positional notation comes so naturally to us that we barely think about it. For instance, you instinctively know that the 7 in 237,325 represents 7,000. With binary, however, it takes a bit more effort to understand how each position contributes to the number.

Understanding machine code starts with appreciating the numeral systems it’s built upon. The simplicity of binary—using just 0s and 1s—forms the foundation of all modern computing. By mastering concepts like decimal, binary, and positional notation, you unlock the logic behind how computers process and execute instructions.

Happy computing!